Linear regression, whether simple or multiple, relies on several key assumptions to ensure that the model is valid and produces reliable results. If these assumptions are violated, the model’s predictions and interpretations may be incorrect or misleading.

Below are the key assumptions of linear regression:

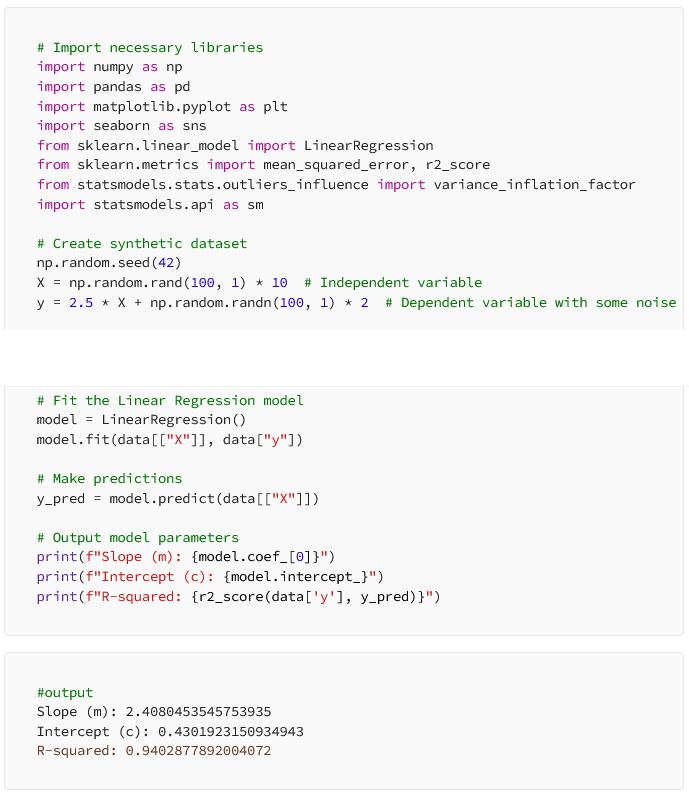

To demonstrate how to check and handle the assumptions of linear regression, I’ll provide a Python example using a synthetic dataset. We will walk through each assumption step by step, using visualization and statistical tests where necessary.

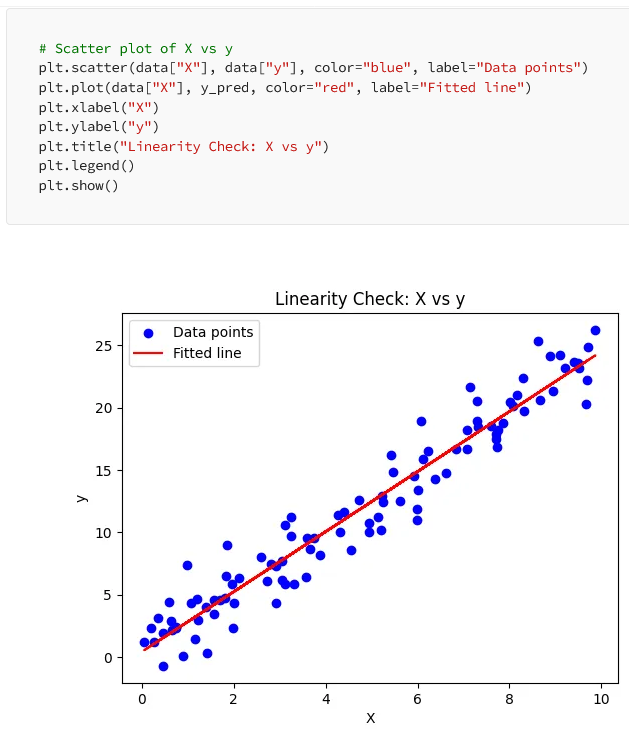
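As a rough text version of this setup, here is a minimal sketch that builds a synthetic dataset and fits a straight line with NumPy. The seed, sample size, true coefficients (intercept 3, slope 2) and noise level are all assumptions chosen for illustration, not values taken from the original example.

```python
import numpy as np

# Fixed seed so the sketch is reproducible (the seed value is arbitrary).
rng = np.random.default_rng(42)

n = 200
X = rng.uniform(0, 10, size=n)           # one predictor
noise = rng.normal(0, 1.0, size=n)       # independent, constant-variance errors
y = 3.0 + 2.0 * X + noise                # assumed "true" model: y = 3 + 2x + error

# Design matrix with an intercept column, then ordinary least squares.
A = np.column_stack([np.ones(n), X])
coef, *_ = np.linalg.lstsq(A, y, rcond=None)

fitted = A @ coef
residuals = y - fitted

print("intercept, slope:", np.round(coef, 3))
print("mean residual:", residuals.mean())
```

The `fitted` and `residuals` arrays are the raw material for most of the checks that follow: nearly every assumption is tested by looking at how the residuals behave.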

#1. Linearity

Definition: There is a linear relationship between the dependent variable and the independent variable(s).

Implication: The change in the dependent variable is proportional to the change in the independent variable(s).

Violation: If the relationship is non-linear (e.g., exponential or polynomial), a linear regression model will not fit the data well, leading to inaccurate predictions.

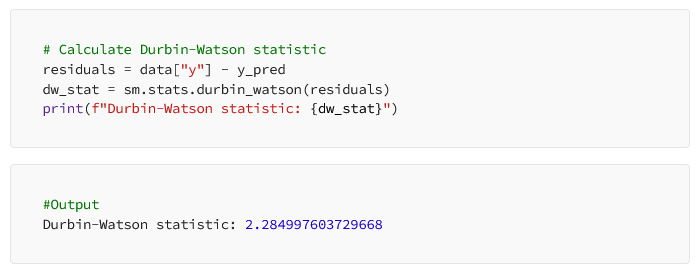

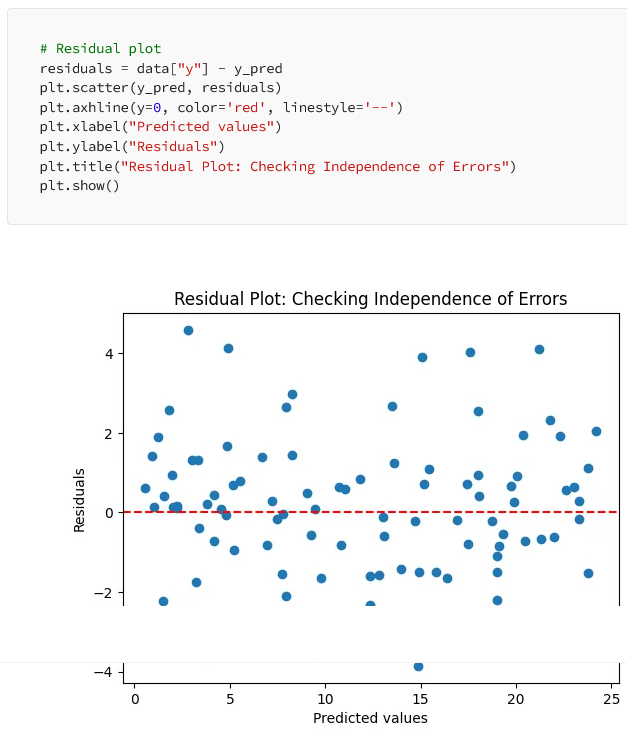
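One simple numerical way to look for a missed curve, sketched here under my own assumptions, is to fit a straight line and then check whether the residuals still correlate with the square of the predictor. The helper name `curvature_signal` and both synthetic datasets are hypothetical and only serve the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 300
x = rng.uniform(-3, 3, size=n)

# Two synthetic targets: one truly linear, one with an added quadratic term.
y_linear = 1.0 + 2.0 * x + rng.normal(0, 1, n)
y_curved = 1.0 + 2.0 * x + 1.5 * x**2 + rng.normal(0, 1, n)

def linear_fit_residuals(x, y):
    """Residuals of an ordinary least squares straight-line fit."""
    A = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return y - A @ coef

def curvature_signal(x, y):
    """Correlation between straight-line residuals and x squared.

    Values near 0 suggest the line captured the trend; values far
    from 0 suggest leftover curvature that the line could not model.
    """
    resid = linear_fit_residuals(x, y)
    return np.corrcoef(resid, x**2)[0, 1]

sig_linear = curvature_signal(x, y_linear)
sig_curved = curvature_signal(x, y_curved)
print("linear data:", round(sig_linear, 3))
print("curved data:", round(sig_curved, 3))
```

This only detects quadratic-style curvature. A scatter plot of residuals against fitted values remains the more general check.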

#2. Independence of Errors (No Autocorrelation)

Definition: The residuals (errors) are independent of each other.

Implication: There is no pattern or correlation between residuals; they should be random.

Violation: If the residuals are correlated (autocorrelation), the model is probably missing an important variable or a time-dependent pattern. This is especially common in time-series data.

The Durbin-Watson test is commonly used to detect autocorrelation (correlation between successive residuals). The value of the Durbin-Watson statistic ranges from 0 to 4:

1. A value near 2 indicates no autocorrelation.

2. A value toward 0 indicates positive autocorrelation.

3. A value toward 4 indicates negative autocorrelation.

A Durbin-Watson statistic of 2.28 is slightly greater than 2 and still close to the ideal value, which suggests that there is no significant autocorrelation in the residuals. The residuals are largely independent of each other, which is good for our linear regression model.
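To make the statistic less of a black box, here is a minimal sketch that computes it directly from its definition: the sum of squared differences between successive residuals, divided by the sum of squared residuals. The two series below are synthetic stand-ins for residuals (assumptions for illustration), so the printed values will not match the 2.28 reported above. In practice, statsmodels provides `durbin_watson` in `statsmodels.stats.stattools`.

```python
import numpy as np

def durbin_watson(residuals):
    """Durbin-Watson statistic: sum((e_t - e_{t-1})^2) / sum(e_t^2)."""
    e = np.asarray(residuals, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e ** 2)

rng = np.random.default_rng(1)
n = 500

# Stand-in 1: independent errors (no autocorrelation).
independent = rng.normal(0, 1, n)

# Stand-in 2: AR(1) errors, e_t = 0.8 * e_{t-1} + shock (positive autocorrelation).
shocks = rng.normal(0, 1, n)
autocorrelated = np.zeros(n)
for t in range(1, n):
    autocorrelated[t] = 0.8 * autocorrelated[t - 1] + shocks[t]

dw_independent = durbin_watson(independent)
dw_autocorrelated = durbin_watson(autocorrelated)
print("independent errors:   ", round(dw_independent, 2))
print("autocorrelated errors:", round(dw_autocorrelated, 2))
```

The statistic is approximately 2(1 - r), where r is the lag-1 correlation of the residuals, which is why independent errors land near 2 and positively correlated errors fall toward 0.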

Monika Kashyap

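Residuals should not be correlated. We can also check this visually using a residual plot.

In this plot, the residuals should be scattered randomly around 0. If there’s a discernible pattern, such as a wave or long runs of residuals with the same sign, the independence assumption may be violated. A funnel shape, by contrast, points to non-constant variance, which is covered in the next section.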

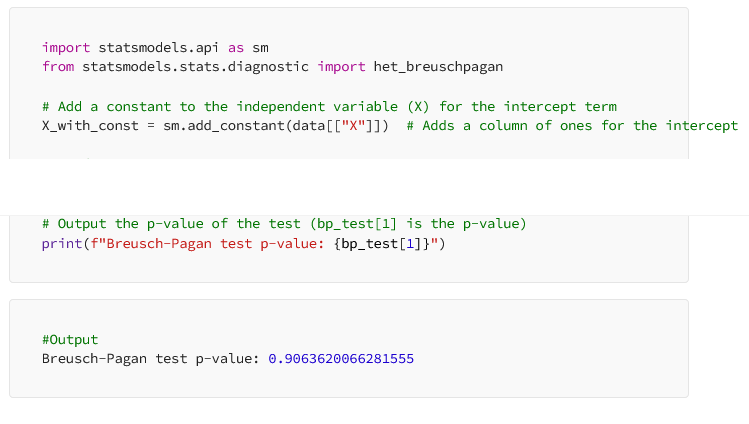

#3. Homoscedasticity

We want to check if the residuals have constant variance. This can also be visualized using the residual plot (same as above). In addition, we can perform a statistical test like the Breusch-Pagan test.

Definition: The residuals have constant variance across all levels of the independent variables.

Implication: The spread of residuals should be consistent, and not increase or decrease as the independent variable changes.

Violation: If the residuals show increasing or decreasing spread (heteroscedasticity), the model’s error terms do not have constant variance. The coefficient estimates remain unbiased but become inefficient, and the usual standard errors, and therefore the p-values and confidence intervals, become unreliable. A plot of residuals vs. predicted values can be used to check for homoscedasticity.

The output of the Breusch-Pagan test includes a p-value. A p-value > 0.05 means we fail to reject the null hypothesis of constant variance, i.e., there is no evidence of heteroscedasticity. A p-value below 0.05 suggests that the variance of the residuals changes with the predictors.
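To show what the test does under the hood, here is a hand-rolled sketch for a single predictor: regress the squared residuals on the predictor and use n times the R² of that auxiliary regression as the test statistic. This is my own simplified version of the studentized (Koenker) form of the test, not the code behind the screenshots. In practice, statsmodels provides `het_breuschpagan` in `statsmodels.stats.diagnostic`. The function name, data and seed below are assumptions.

```python
import math
import numpy as np

def ols_coefficients(A, y):
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def breusch_pagan_one_predictor(x, y):
    """Studentized Breusch-Pagan LM test for a model with ONE predictor."""
    n = len(x)
    A = np.column_stack([np.ones(n), x])
    resid = y - A @ ols_coefficients(A, y)
    squared = resid ** 2

    # Auxiliary regression: squared residuals on the same predictor.
    aux_fitted = A @ ols_coefficients(A, squared)
    ss_res = np.sum((squared - aux_fitted) ** 2)
    ss_tot = np.sum((squared - squared.mean()) ** 2)
    r2 = 1.0 - ss_res / ss_tot

    lm = max(n * r2, 0.0)  # guard against tiny negative rounding error
    # Chi-square survival function with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(lm / 2.0))
    return lm, p_value

rng = np.random.default_rng(2)
n = 400
x = rng.uniform(1, 10, n)

y_constant = 1.0 + 2.0 * x + rng.normal(0, 1.0, n)        # constant variance
y_funnel = 1.0 + 2.0 * x + rng.normal(0, 1.0, n) * 0.5 * x  # spread grows with x

lm_hom, p_hom = breusch_pagan_one_predictor(x, y_constant)
lm_het, p_het = breusch_pagan_one_predictor(x, y_funnel)
print("constant variance: LM=%.2f, p=%.4f" % (lm_hom, p_hom))
print("growing variance:  LM=%.2f, p=%.4g" % (lm_het, p_het))
```

With more than one predictor, the degrees of freedom equal the number of predictors and the closed-form p-value above no longer applies, so a library routine is the safer choice.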

Handling Heteroscedasticity: Try transforming y (e.g., log transformation: np.log(y)) or use weighted least squares regression.
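Here is a minimal sketch of the weighted least squares option. It assumes we already know that the error standard deviation grows in proportion to x, so the weights are 1/x². Real data rarely comes with that knowledge, so treat the weights, the data and the helper name `spread_trend` as illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 400
x = rng.uniform(1, 10, n)
y = 1.0 + 2.0 * x + rng.normal(0, 1.0, n) * 0.5 * x   # error sd proportional to x

A = np.column_stack([np.ones(n), x])

# Ordinary least squares: every observation counts equally.
ols_coef, *_ = np.linalg.lstsq(A, y, rcond=None)
ols_resid = y - A @ ols_coef

# Weighted least squares: scale each row by sqrt(weight), then solve as usual.
weights = 1.0 / x**2                  # assumed variance structure: var ~ x^2
sqrt_w = np.sqrt(weights)
wls_coef, *_ = np.linalg.lstsq(A * sqrt_w[:, None], y * sqrt_w, rcond=None)
wls_scaled_resid = (y - A @ wls_coef) * sqrt_w

def spread_trend(resid, x):
    """Correlation between |residual| and x; near 0 means no funnel."""
    return np.corrcoef(np.abs(resid), x)[0, 1]

trend_ols = spread_trend(ols_resid, x)
trend_wls = spread_trend(wls_scaled_resid, x)
print("OLS residual spread trend:", round(trend_ols, 3))
print("WLS scaled residual trend:", round(trend_wls, 3))
```

The weighted residuals are the ones to inspect after WLS: if the weights are right, their spread should no longer depend on x.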

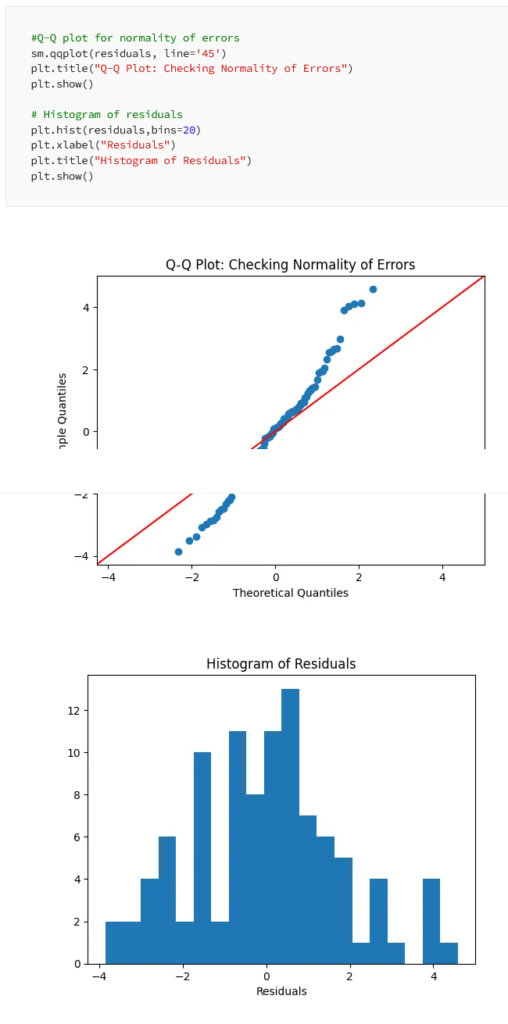

#4. Normality of Errors

Definition: The residuals (errors) should follow a normal distribution.

Implication: This assumption is particularly important for making valid statistical inferences, such as hypothesis tests and confidence intervals.

Violation: If the errors are not normally distributed, it could affect the validity of confidence intervals and hypothesis tests. This can be assessed using a Q-Q plot or a histogram of the residuals.

In the Q-Q plot, residuals should fall along the straight line if they are normally distributed. The histogram should show a bell-shaped curve.
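The points of a Q-Q plot can also be computed by hand, which gives a single number to go with the picture: the correlation between theoretical and sample quantiles. The sketch below uses only NumPy and the standard library. The plotting positions (i - 0.5)/n are one common convention among several, and both residual samples are synthetic assumptions.

```python
import numpy as np
from statistics import NormalDist

def qq_points(residuals):
    """Return (theoretical normal quantiles, standardized sorted residuals)."""
    r = np.sort(np.asarray(residuals, dtype=float))
    n = len(r)
    probs = (np.arange(1, n + 1) - 0.5) / n          # plotting positions
    std_normal = NormalDist()                        # mean 0, sd 1
    theoretical = np.array([std_normal.inv_cdf(float(p)) for p in probs])
    standardized = (r - r.mean()) / r.std(ddof=1)
    return theoretical, standardized

def qq_correlation(residuals):
    """Correlation of the Q-Q points; values near 1 mean 'close to a line'."""
    theoretical, sample = qq_points(residuals)
    return np.corrcoef(theoretical, sample)[0, 1]

rng = np.random.default_rng(4)
normal_resid = rng.normal(0, 1, 500)
skewed_resid = rng.exponential(1.0, 500) - 1.0       # right-skewed, mean near 0

corr_normal = qq_correlation(normal_resid)
corr_skewed = qq_correlation(skewed_resid)
print("normal residuals:", round(corr_normal, 4))
print("skewed residuals:", round(corr_skewed, 4))
```

Plotting the two arrays returned by `qq_points` against each other gives the usual Q-Q plot, with the skewed sample bending away from the diagonal in the upper tail.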

#5. No Multicollinearity (for Multiple Linear Regression)

The no multicollinearity assumption in multiple linear regression implies that the predictor variables (independent variables) are not highly correlated with each other. When multicollinearity exists, it becomes difficult to determine the individual impact of each predictor on the target variable because they share similar information, leading to unstable and unreliable coefficient estimates.

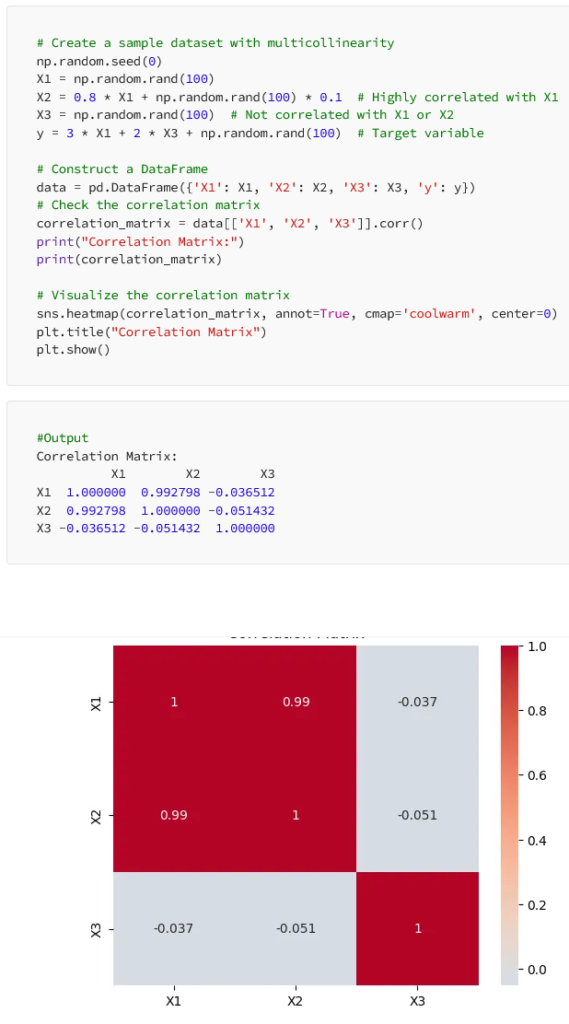

Detecting Multicollinearity using the correlation matrix.

This high correlation between X1 and X2 is a sign of multicollinearity, which can be problematic in linear regression models. Multicollinearity makes it difficult to isolate the individual effects of the correlated variables on the target variable (y). It can lead to unstable coefficient estimates and make it harder to interpret the model’s results.
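A correlation matrix like this takes only a few lines to compute. In the sketch below, X2 is deliberately built as a noisy copy of X1 so that the pair stands out. The variable construction and the 0.8 flagging threshold are assumptions (a common rule of thumb), not values from the original example.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 300

X1 = rng.normal(0, 1, n)
X2 = 0.95 * X1 + rng.normal(0, 0.2, n)   # almost a copy of X1
X3 = rng.normal(0, 1, n)                 # unrelated predictor

predictors = np.column_stack([X1, X2, X3])
names = ["X1", "X2", "X3"]

# rowvar=False: each column is a variable, each row an observation.
corr = np.corrcoef(predictors, rowvar=False)
print(np.round(corr, 2))

# Flag predictor pairs whose absolute correlation exceeds the threshold.
threshold = 0.8
flagged = [
    (names[i], names[j], corr[i, j])
    for i in range(len(names))
    for j in range(i + 1, len(names))
    if abs(corr[i, j]) > threshold
]
for a, b, r in flagged:
    print(f"{a} and {b} are highly correlated: r = {r:.2f}")
```

A pairwise matrix can miss cases where one predictor is a combination of several others, so a clean matrix is reassuring but not conclusive.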

Handling Multicollinearity: Remove or combine variables, or use regularization methods like Ridge or Lasso regression to minimize multicollinearity’s impact on model performance.
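To see what regularization does to correlated predictors, here is a small closed-form Ridge sketch in NumPy. It is an illustration under assumed data and an arbitrary penalty (`alpha = 10`), not a tuned model. In practice, scikit-learn's `Ridge` and `Lasso` estimators are the usual route, with the penalty chosen by cross-validation.

```python
import numpy as np

def ridge_coefficients(X, y, alpha):
    """Closed-form ridge slopes on centered data: (X'X + alpha*I)^-1 X'y.

    alpha = 0 reduces to ordinary least squares. The intercept is
    handled by centering and is not penalized.
    """
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    p = X.shape[1]
    return np.linalg.solve(Xc.T @ Xc + alpha * np.eye(p), Xc.T @ yc)

rng = np.random.default_rng(6)
n = 200
X1 = rng.normal(0, 1, n)
X2 = X1 + rng.normal(0, 0.1, n)          # nearly identical to X1
X = np.column_stack([X1, X2])
y = 2.0 * X1 + 2.0 * X2 + rng.normal(0, 1, n)

ols = ridge_coefficients(X, y, alpha=0.0)
ridge = ridge_coefficients(X, y, alpha=10.0)

print("OLS coefficients:  ", np.round(ols, 2))
print("Ridge coefficients:", np.round(ridge, 2))
```

OLS can split the shared effect between X1 and X2 almost arbitrarily, while the penalty pulls the two coefficients toward similar, more stable values. Their sum stays close to the combined effect of 4.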

#6. No Measurement Error in Predictors

The model assumes that the independent variables are measured without error. Measurement errors in the independent variables can bias the results, typically pulling the estimated coefficients toward zero. It’s challenging to assess this directly. You could look at reliability metrics for your measurements or use more reliable measurement tools.
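A quick simulation makes the effect of measurement error visible. In this sketch the true slope is 2, and the predictor is observed with random noise whose variance equals that of the predictor itself. All of these numbers are assumptions chosen to keep the arithmetic easy.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 5000

x_true = rng.normal(0, 1, n)                       # what we wish we had measured
y = 1.0 + 2.0 * x_true + rng.normal(0, 0.5, n)     # true slope is 2
x_observed = x_true + rng.normal(0, 1.0, n)        # measured with random error

def fitted_slope(x, y):
    """Slope of a straight-line least squares fit."""
    return np.polyfit(x, y, 1)[0]

slope_clean = fitted_slope(x_true, y)
slope_noisy = fitted_slope(x_observed, y)

print("slope using true x:    ", round(slope_clean, 3))
print("slope using observed x:", round(slope_noisy, 3))
```

With random measurement error of this kind, the estimated slope shrinks by roughly var(x) / (var(x) + var(error)), which is 1/2 here. The fit with the noisy predictor lands near 1 instead of 2.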

Overall:

By understanding and verifying the assumptions of linear regression, data scientists can build more robust and reliable models that provide meaningful insights and accurate predictions. The techniques and tools presented above serve as a valuable foundation for conducting thorough linear regression analysis.